Azure Data Factory V2 – Pipeline Functional Testing

This article explains how to functionally test pipelines in Azure Data Factory.

Note:

This article applies to Azure Data Factory V2.

This article assumes that you have gone through Introduction to Azure Data Factory and the QuickStart Tutorial.

Credits: Thanks to the ADFv2 Product Group (Anudeep Sharma, Hermine Vermishyan and Abhishek Narain) for reviewing and for all their assistance with ADFv2, and to my partner in awesomeness David Kuo for testing the script.

Overview

During the development phase of an Azure Data Factory pipeline, the UX provides a debugging feature that you can use to debug a pipeline and find out whether anything fails for reasons such as an incorrect connection string, an object missing from the database, or a wrong query.

With the same idea in mind, I have extended debugging from the pipeline development stage to the release stage. You can use a PowerShell script to perform functional testing of pipelines in a Test, Integration or UAT environment, so that issues are caught at that stage instead of in the Production environment. In this article series I will share how to do this with the PowerShell script, and the good news is that you can use the same script in your environment without making any code changes.

Concept

A functional test in ADF validates whether a pipeline works: if anything related to functionality fails, you stop the release, fix the issue and iterate. It is closely aligned with build verification, ensuring that the objects a pipeline requires, such as linked services, tables and storage, are successfully validated before release to the production environment.

Scope

In scope is testing the functionality of pipelines: ensuring that databases, servers, storage, etc. are configured correctly and that a pipeline does not fail because of a misconfiguration, such as a database that does not exist. Errors due to data quality, performance or anything related to data integrity are outside the scope of functional testing.

How it Works

Let's go into the details of how functional testing works with ADFv2.

First, you need to understand how debugging works in a pipeline. Gaurav Malhotra from the ADF team has made a very informative video on this (highly recommended if you want insight into pipeline debugging): Iterative development and debugging using Data Factory

As the video shows, debugging means running a pipeline, and running a pipeline requires values for the parameters defined on it; the pipeline then runs in debug mode with output showing whether it succeeded or failed. Following the same approach, I wrote this functionality with the following flow:

Functional Testing Flow:

Now let's look at each step in this flow:

1. Azure Active Directory (AAD) Authentication: AAD is required for silent authentication of the PowerShell script used to automate testing. The script needs to access Azure Data Factory to get the list of pipelines, so the Application ID must also have access to the data factory. The following handy articles explain how to create an Application ID:

a. How to create an AAD application and service principal

b. How to give the Application ID access

i. Go to your data factory in the Azure Portal.

ii. Under All settings, select Users and click Add, as shown in the following figure:

Settings:

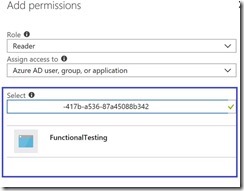

iii. Clicking Add opens another window where you can add the Application ID you created in step 1. Because the script only needs to get the list of pipelines, we make the application a member of the Reader role, as shown in the following figure, where the application named FunctionalTesting is added as a member of the Reader role:

iv. In PowerShell we use the Application ID for silent authentication. It is recommended to pull secrets from Key Vault; in this case we need the Application ID secret (password). The secret is a parameter of the PowerShell script, so you can either pass it as plain text or fetch it from Key Vault during the release process and pass it along. The script performs silent authentication using the Application ID, which is also known as a service principal; you can refer to this article to get the TenantID.

2. Functional Testing Logic:

a. Get the list of pipelines from ADF

b. Check for pipeline exclusion

Example of exclusion file:
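The exclusion check in step b can be sketched in Python as follows. This is an illustration of the logic, not the author's PowerShell script; because the exclusion file format is not shown here, the sketch assumes a plain-text file with one pipeline name per line, and `parse_exclusions` and `pipelines_to_test` are hypothetical names.

```python
from __future__ import annotations


def parse_exclusions(text: str) -> set[str]:
    """Parse exclusion-file text, assuming one pipeline name per line (hypothetical format)."""
    # Strip surrounding whitespace and skip blank lines
    return {line.strip() for line in text.splitlines() if line.strip()}


def pipelines_to_test(all_pipelines: list[str], excluded: set[str]) -> list[str]:
    """Keep the factory's pipeline order, dropping any pipeline listed as excluded."""
    return [name for name in all_pipelines if name not in excluded]
```

In practice the text would be read from the file in the folder passed as `-excludedPipelinesFilePath`, and `all_pipelines` would be the list retrieved in step a.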

c. Pipeline test file: The following is an example of a test file. In my case I have a pipeline named pl-testValidation in the data factory, and that pipeline expects a parameter named SubFolder, so I create a parameter file for the pipeline, which the testing logic uses as the functional test file. The test file name must match the pipeline name, and the file should contain every parameter defined on the pipeline. If this file is missing, the pipeline is marked as failed with a missing test file.

Example of a test file: since the pipeline is named pl-testValidation, I created the test file pl-testValidation.json (PipelineName.json) with the parameter consumed by the pipeline and a TestTimeout value, because I want to run this test for 80 seconds. If this value is missing, it defaults to 30 seconds.
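The rule from step c, that a test file should supply every parameter the pipeline defines, can be checked as in the Python sketch below. It is not the author's PowerShell code; it assumes the test file is a flat JSON object mapping parameter names to values, treats TestTimeout as a test setting rather than a pipeline parameter, and `missing_parameters` is a hypothetical name. The pipeline's own parameter names would come from the data factory.

```python
from __future__ import annotations

import json


def missing_parameters(pipeline_parameters: list[str], test_file_text: str) -> list[str]:
    """Return pipeline parameters the test file does not supply, sorted for stable output.

    Assumes a flat JSON object of parameter name -> value; TestTimeout is ignored
    because it configures the test, not the pipeline.
    """
    supplied = set(json.loads(test_file_text)) - {"TestTimeout"}
    return sorted(set(pipeline_parameters) - supplied)
```

For the pl-testValidation example, a file containing SubFolder and TestTimeout yields an empty list, so the pipeline is ready to run.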

d. The script iterates through the pipelines, checking whether PipelineName.json exists and reading the TestTimeout value, defaulting it to 30 seconds if it is missing.
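The iteration in step d can be sketched in Python as below, under the same assumption that each test file is a flat JSON object. The reader function is passed in so the logic can be exercised without touching disk; `dir_reader`, `plan_tests` and the `"missing test file"` outcome string are hypothetical names chosen for this illustration, not taken from the author's script.

```python
from __future__ import annotations

import json
from pathlib import Path
from typing import Callable, Optional

DEFAULT_TIMEOUT_SECONDS = 30  # used when a test file has no TestTimeout


def dir_reader(test_dir: str) -> Callable[[str], Optional[str]]:
    """Return a reader that loads <test_dir>/<PipelineName>.json, or None if the file is absent."""
    def read(pipeline_name: str) -> Optional[str]:
        path = Path(test_dir) / f"{pipeline_name}.json"
        return path.read_text(encoding="utf-8") if path.is_file() else None
    return read


def plan_tests(pipelines: list[str], read_test_file: Callable[[str], Optional[str]]):
    """Pair each pipeline with (parameters, timeout); record pipelines without a test file as failed."""
    plans: dict[str, tuple[dict, int]] = {}
    failures: dict[str, str] = {}
    for name in pipelines:
        text = read_test_file(name)
        if text is None:
            failures[name] = "missing test file"
            continue
        params = json.loads(text)
        # TestTimeout is a test setting, so remove it from the pipeline parameters
        timeout = int(params.pop("TestTimeout", DEFAULT_TIMEOUT_SECONDS))
        plans[name] = (params, timeout)
    return plans, failures
```

Against real files, `plan_tests(names, dir_reader("."))` would read from the current folder, matching the `-functionalTestJsonFilePath ".\"` value used later.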

e. Run the pipeline test, which starts a pipeline run and captures its run ID in $runId

f. Use the value of $runId to get the current status (refer to the PowerShell script on Git for the full code)

g. Use the value of $runId to get the status (refer to the PowerShell script on Git for the full code)

h. Fail the test if it exceeds the timeout setting
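Steps e through h amount to a start, poll and time-out loop, sketched in Python below. `start_run` and `get_status` are hypothetical hooks standing in for the ADF calls the PowerShell script makes (start a run and return its run ID; look up that run's status), and the sketch assumes ADF V2 run status strings in which Queued, InProgress and Canceling mean the run is still going. The clock and sleep functions are injectable so the loop can be tested without waiting.

```python
from __future__ import annotations

import time
from typing import Callable

RUNNING_STATES = {"Queued", "InProgress", "Canceling"}  # assumed non-terminal ADF V2 statuses


def run_pipeline_test(start_run: Callable[[], str],
                      get_status: Callable[[str], str],
                      timeout_seconds: int,
                      poll_seconds: float = 5.0,
                      clock: Callable[[], float] = time.monotonic,
                      sleep: Callable[[float], None] = time.sleep) -> str:
    """Start a run, poll its status with the run ID, and report "TimedOut" past the timeout."""
    run_id = start_run()                       # step e: start the run, keep its ID
    deadline = clock() + timeout_seconds
    while True:
        status = get_status(run_id)            # steps f and g: look up status by run ID
        if status not in RUNNING_STATES:
            return status                      # terminal status, e.g. Succeeded or Failed
        if clock() >= deadline:
            return "TimedOut"                  # step h: fail the test on timeout
        sleep(poll_seconds)
```

Polling with a deadline rather than a fixed number of checks keeps the TestTimeout value from the test file meaningful regardless of the poll interval.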

i. If you are integrated with Release Manager, the script throws an exception on failure, resulting in a failed release, and outputs the failed pipelines that need to be fixed before deploying to the production environment. Don't be disheartened if you are not using Release Manager: you can still run the script with the following command:

```powershell
.\AzureDatafactoryFunctionalTesting.ps1 -dataFactoryName "Your Factory Name" -resourceGroupName "Factory Resource Group Name" -azureSubscription "Subscription ID" -applicationSecret "Application Secret from Point 1" -applicationID "Application ID from Point 1" -tenantID "Tenant ID from Point 1" -excludedPipelinesFilePath ".\" -functionalTestJsonFilePath ".\"
```
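The failure handling in step i can be sketched in Python as follows: print every pipeline's outcome, then raise an exception if any pipeline did not succeed. An uncaught exception ends the process with a non-zero exit status, which is what typically makes a release task fail; that link to the release tooling is an assumption, and `FunctionalTestFailure` and `report_results` are hypothetical names rather than the author's code.

```python
from __future__ import annotations


class FunctionalTestFailure(Exception):
    """Raised so that the calling release step fails when any pipeline test fails."""


def report_results(results: dict[str, str]) -> None:
    """Print a per-pipeline summary and raise if any outcome is not "Succeeded".

    results maps pipeline name -> outcome, e.g. "Succeeded", "Failed",
    "TimedOut" or "missing test file".
    """
    for name, outcome in sorted(results.items()):
        print(f"{name}: {outcome}")
    failed = sorted(name for name, outcome in results.items() if outcome != "Succeeded")
    if failed:
        raise FunctionalTestFailure("Failed pipelines: " + ", ".join(failed))
```

Collecting all outcomes before raising means a single run lists every pipeline that needs fixing, instead of stopping at the first failure.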

Conclusion

As you have seen, this article walked step by step through functional testing with ADFv2. In a subsequent article, I will discuss how to integrate it with Release Manager so that this testing framework becomes part of the pipeline release process to production.

Code Location

Git – feel free to download and contribute

ADF – Azure Data Factory Learning Path

If you are looking to learn Azure Data Factory (ADF), this is a good path to start with, as it covers the various components of ADF, including all kinds of supported flows, with code samples.

I suggest starting with the Portal, as it requires no coding experience. If you come from a DevOps or DBA background, you can follow the PowerShell path; if you come from a pure development background, you can use the APIs that ADF provides through NuGet.

Here is the link to the learning path:

https://azure.microsoft.com/en-us/documentation/learning-paths/data-factory/

The following link shows how the Microsoft Studios (XBOX) team uses Azure Data Factory:

https://channel9.msdn.com/Blogs/raw-tech/Halo-Minecraft-and-More-How-Microsoft-Studios-Processes-Gaming-Event-Data

Happy Learning


SSAS Monitoring Tool – SQL 2014 ASTrace

Refer to SSAS Monitoring Tool – ASTrace to learn more about ASTrace. This post was pending for a long time, as I received tons of emails and comments about SQL 2014 and 2016 ASTrace. With this post, I'd like to mention that I have shared the source code on Git so anyone can contribute to it.

The code for ASTrace 2014 is tested with the latest build of SQL Server 2014. For 2016, I tested with SQL 2016 Preview and haven't had a chance to test with RTM, so in the coming days I will test it and update the code if required. Feel free to update it yourself, as it's on Git and anyone can contribute.

Here is Git Link – https://github.com/karanspeaks/SQL-Server-Analysis-Services-Samples

Halo, Minecraft, and More! How Microsoft Studios Processes Gaming Event Data

My first video on Channel 9. It talks about how we handle the various events generated by games.


Azure Data Factory: Detecting and Re-Running failed ADF Slices

Recently I came across a scenario where I needed to detect failed slices of all datasets in Azure Data Factory. In my case I needed to cover the last 3 months, and there were 600+ failed slices: they failed with a validation error because the source data wasn't present, and after a number of retries they were marked as failed.

In such cases it is difficult to re-run the slices from the Portal, as you need to right-click each slice and run it explicitly.

Solution: I wrote the following PowerShell script, which detects all failed slices in a given Azure Data Factory and re-runs them with your consent.

You can use the same script not only for failed slices but for any status; you just need to change the status used to filter the slices, as shown in the script.

I am also planning to write a solution that runs as a service in a worker role and automatically detects and re-runs failed slices within a given time window.

You might ask: ADF already has re-run logic, so why go through the hassle of writing and running a script?

It does, but after a certain number of retries a slice is marked as failed, and the only way to run it again is through the Portal or programmatically.

So, here is my contribution to ADF Community.

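Prerequisite: Azure Resource Manager PowerShell (https://azure.microsoft.com/en-us/blog/azps-1-0-pre/)

Copy the following code into a text file and save it as file.ps1.

You can also download the script and save it as a .ps1 file: https://karanspeaks.com/wp-content/uploads/2015/11/re-run-failed-slices-ps11.doc (Click Here)

```powershell
#Begin Script
Login-AzureRmAccount
$Subscription = "Provide Subscription ID"
$ResourceGroupName = "Provide Resource Group Name"   # placeholder, set for your factory
$DataFactoryName = "Provide Data Factory Name"       # placeholder, set for your factory
$StartDateTime = (Get-Date).AddMonths(-3)             # look-back window, e.g. the last 3 months
$EndDateTime = Get-Date
Select-AzureRmSubscription -SubscriptionId $Subscription
# Get dataset names in the data factory; to run for a single table, set $tableNames = @("YourTableName")
$tableNames = (Get-AzureRmDataFactoryDataset -ResourceGroupName $ResourceGroupName -DataFactoryName $DataFactoryName).DatasetName
$tableNames # lists table names
foreach ($tableName in $tableNames) {
    $slices = Get-AzureRmDataFactorySlice -ResourceGroupName $ResourceGroupName -DataFactoryName $DataFactoryName -DatasetName $tableName -StartDateTime $StartDateTime -EndDateTime $EndDateTime
    # Change 'Failed' to another status to work on slices in that status
    $failedSlices = $slices | Where-Object { $_.Status -eq 'Failed' }
    $failedSlicesCount = @($failedSlices).Count
    if ($failedSlicesCount -gt 0) {
        Write-Host "Total number of slices Failed for ${tableName}: $failedSlicesCount"
        # Ask for consent before re-running
        if ((Read-Host "Re-run these slices? (Y/N)") -eq 'Y') {
            foreach ($failed in $failedSlices) {
                # Setting a slice back to Waiting makes ADF run it again
                Set-AzureRmDataFactorySliceStatus -ResourceGroupName $ResourceGroupName -DataFactoryName $DataFactoryName -DatasetName $tableName -StartDateTime $failed.Start -EndDateTime $failed.End -Status Waiting -UpdateType Individual
            }
        }
    }
}
#End Script
```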

Microsoft Azure Essentials: Azure Machine Learning

The free book Microsoft Azure Essentials: Azure Machine Learning is quite informative if you want to learn about ML.

Link:

Additionally, I recommend the free course from the University of Washington, available through Coursera.

It is a step-by-step course that goes in depth into machine learning.

Link:

Informative HDInsight Links

PDF document download link: Click Here

I have tried to collate informative articles related to HDInsight. In the comments section, please add URLs of articles or videos that you came across and would like me to add to this list. Links:

- Get started using Hadoop with Hive in HDInsight to analyze mobile handset use
- Get started with the HDInsight Emulator
- Getting started using Storm with HDInsight (Hadoop)

Here are the Hadoop technologies in HDInsight:

- Ambari: Cluster provisioning, management, and monitoring
- Avro (Microsoft .NET Library for Avro): Data serialization for the Microsoft .NET environment
- HBase: Non-relational database for very large tables
- HDFS: Hadoop Distributed File System
- Hive: SQL-like querying
- Mahout: Machine learning
- MapReduce and YARN: Distributed processing and resource management
- Oozie: Workflow management
- Pig: Simpler scripting for MapReduce transformations
- Sqoop: Data import and export
- Storm: Real-time processing of fast, large data streams
- Zookeeper: Coordinates processes in distributed systems

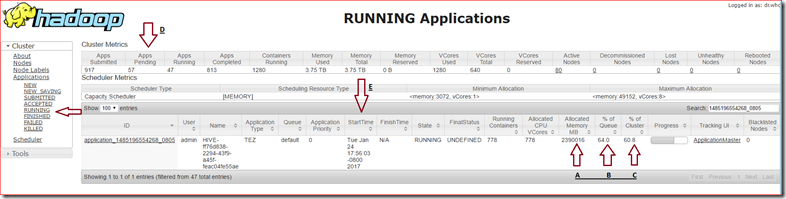

![clip_image001[4] clip_image001[4]](https://karanspeaks.com/wp-content/uploads/2017/01/clip_image0014_thumb.png?w=755&h=462)

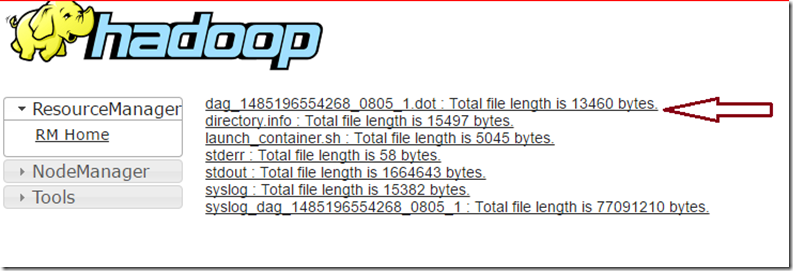

![clip_image002[5] clip_image002[5]](https://karanspeaks.com/wp-content/uploads/2017/01/clip_image0025_thumb.png?w=772&h=455)