Archive

SSAS: All Possible Scenarios of Changing SSAS Data Folder

Title – All Possible Scenarios of Changing SSAS Data Directories

Scenario 1:

Recreating a New Data Folder in New Drive / Same Drive

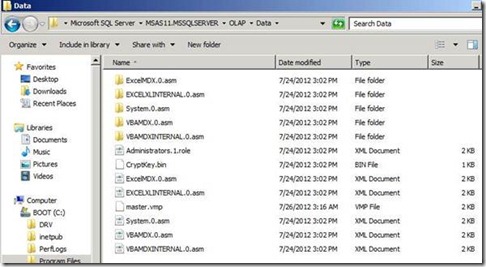

Creating a New Data Folder and changing the Data File Location in configuration files as shown below –

1. Connect to your Analysis Server in SSMS with a user that is a member of the server role but NOT the user that installed the software

2. Right-click on the server in Object Explorer. Select ‘Properties…’

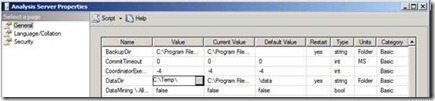

3. On the general tab, change the value of the DataDir property to a different folder (it doesn’t matter what)

4. Click ‘OK’ on “Analysis Server properties” dialog. You will get a message telling you that the server needs to be restarted

5. Restart your Analysis Server in Services panel.

Caveat –

If you change the DataDir property of Analysis Server (i.e. the place that, by default, Analysis Services stores all its data) then you will lose valuable metadata about your installation – namely that you will lose all members of the fixed admin Server Role. The only user who can then connect to Analysis Server and carry out administrative functions is the user that installed the software.

These Screen Shots demos same –

change to c:\temp

request to restart.

Then on Security tab you missed the Server Administrators ….

Simple workaround –

For getting users of Admin Roles you can follow below mentioned steps:

Before applying steps mentioned above take the Script of SSAS Server Admin Roles –

Connect to SSAS

Right-click on the server in Object Explorer. Select ‘Properties…’

Select Page – Security ->On drop down Script ->

Drop Down Script and select Script to a file, now follow below mentioned steps.

1. On the general page, change the value of the DataDir property to a different folder (it doesn’t matter what)

2. Click ‘OK’ on “Analysis Server properties” dialog. You will get a message telling you that the server needs to be restarted

3. Restart your Analysis Server in Services panel.

4. Now log onto the server as the user that originally installed Analysis Services (for this is now the only user that will have server role privelages)

5. Open Script File which we had saved earlier -> Script file will open in XMLA query window.

6. Execute Script and after that you will find all existing users in place.

Scenario 2:

Moving Data Directory of Analysis Services Refer this blog post

In this Scenario, Default Data Folder is V:\Data but while creating partitions developer changed the drive of Partition Directory. In such a case you can use Scenario 2 for Moving Data directory but in this Scenario I will show how to move the Partitions Directory (in case Partition directory got some issues and you need to relocate Partition Files)

Default data folder – V:\Data

Partition 2006 for Sales reside in C:\Partitions

New Location F:\Partitions

First Option:

OPEN Database in BIDS – > Go to Partitions -> In Storage Location Chang the path to new one.

Current-

New –

Before saving ensure that in F drive you have directory with same name, else you will get error stating folder doesn’t exist.

As soon as you hit save you will get this message – so you need to process partition to get it done.

Once you process – you are good, you will get data in new drive-folder.

Second Option:

Yes we can use the database backup and during restore change the drive location for specific Partition but if the Partition file size >4gb and AS is 2005, then you will be in trouble J because there is a limitation that a specific file shouldn’t be >4GB (yes fixed in SQL 2008) J

SSAS Monitoring Tool– ASTrace

Guys, We have noticed customers asking a tool for Monitoring SSAS Activities; So we have added new features to an existing ASTrace of SQL 2005 and introduced a new version for SQL 2008/R2 & SQL 2012(MOLAP and Tabular).

With this tool you can monitor minimum to maximum SSAS Information. Architecture is pretty simple, ASTrace runs as a service on any server which got prerequisite installed (as stated in documentation) -> collect trace information based on trace template for an SSAS Instance stated in configuration file (you can customize and use your own trace template) and finally pushes trace data into a SQL Table enabling you to query this SQL Table and pull required information.

You can customize Trace Template, SQL Table and Database Name. Documentation will provide you an idea of enhancements which we had done in new version of ASTrace.

AS Trace2008 (SQL 2008 / R2)

AS Trace2012 (MOLAP / Tabular)

Caveat- By design

Restarting ASTrace Service –

By design, ASTrace will stop auditing whenever you restart the Analysis Service instance. Therefore, any time that you restart Analysis Services you will also need to restart the ASTrace service.

When the ASTrace service restarts, the service automatically writes the content of the existing table, ASTrace, to a new table with a timestamp. For example:

| Old traces | ASTraceTable_Archive_Tuesday, January 31, 201212:56:35 AM |

| New and current traces | ASTrace |

This workaround is required because trace files are always truncated by the function, InitializeAsWriter. Unless you take action to save the existing trace information, when the service restarts, all previous trace information would be lost. To preserve the trace information prior to the service restart, the ASTrace utility programmatically creates a table, adds a timestamp, and writes any existing trace to the time-stamped table. This lets you maintain a history of events prior to the service restart.

This trigger enables you in collating the result of all ASTrace table and you can run a SQL query for pulling information against single table.

Feel free to ping me if you need any assistance.

Disclaimer

These is a sample tool and will not be supported by CSS.

Dimension: ProcessUpdate – Insight

ProcessUpdate applies only to Dimension. It is the equivalent of incremental dimension processing in Analysis Services 2000. It sends SQL queries to read the entire dimension table and applies the changes—member updates, additions, deletions.

Since ProcessUpdate reads the entire dimension table, it begs the question, “How is it different from ProcessFull?” The difference is that ProcessUpdate does not discard the dimension storage contents. It applies the changes in a “smart” manner that preserves the fact data in dependent partitions. ProcessFull, on the other hand, does an implicit ProcessClear on all dependent partitions. ProcessUpdate is inherently slower than ProcessFull since it is doing additional work to apply the changes.

Depending on the nature of the changes in the dimension table, ProcessUpdate can affect dependent (related) partitions. If only new members were added, then the partitions are not affected. But if members were deleted or if member relationships changed (e.g., a Customer moved from Redmond to Seattle), then the aggregation data and bitmap indexes on the related partitions are dropped. The cube is still available for queries, albeit with lower performance.(with ProcessUpdate Flexible aggregations and indexes on related partitions will be dropped)

After ProcessUpdate you need to Process Indexes on the Partitions. The simple approach of dealing with such problem is to do default processing on associated Cubes, default processing will create dropped Aggregations and Bitmap indexes for affected partitions.

Three Options you can use for building Indexes / Aggregates –

Lazy processing is the mechanism by which the Analysis server automatically builds bitmap indexes and aggregations for dimensions and partitions. Lazy processing is done in the background at a low priority, so it does not interfere with foreground operations such as user queries. The advantage of lazy processing is that it reduces the foreground processing time and the cube is available earlier to users, albeit with lower performance.

2) ProcessAffectedObjects while Processing Dimension (Process Update) – that tells the server to reprocess other objects impacted by this command, benefit of this is All Affected Objects will be process in same Batch.

3) Process Index on Measure Groups or Process Default of a Cube

Scenario A: Update a Product Color (Attribute Key is Color) for a single Product which had a Historical Record in Year 2005 and 2006.

1) If you notice for this specific product got sales only in Year 2005 and 2006

select ProductKey,orderdatekey from testinternestsales

where Productkey=324

2) Created A Test Cube based on TestDate and TestProd-

TestDate –

TestProd

With Color – it got Flexible Relationship.

Four Partitions based on –

2005 WHERE OrderDateKey <= ‘20051231’

2006 WHERE OrderDateKey >= ‘20060101’ AND OrderDateKey <= ‘20061231’ and same was for 2007 / 2008

Dimension Usage

Key Col and Name Col is Color.

3)Auto-Slicing is correct, can be proved by simple query –

Select {[Measures].[Sales Amount]}on 0,[Testprod].[Product Key 1].&[324] on 1

from [Test] WHERE [Test Date].[Calendar Year].&[2005]

4) SELECT * FROM SYSTEMRESTRICTSCHEMA($SYSTEM.DISCOVER_PARTITION_DIMENSION_STAT

, CUBE_NAME = ‘TEST’ , DATABASE_NAME = ‘Adventure Works DW 2008R2’ ,MEASURE_GROUP_NAME = ‘Testinternestsales’

,PARTITION_NAME = ‘2005’)

Checking if Indexes are in Place, if you notice here For Color in all years Indexes are in Place.

5)Also checked in Folder

E:\data\Adventure Works DW 2008R2.0.db\TEST.40.cub\Testinternestsales.40.det\2008.37.prt

E:\data\Adventure Works DW 2008R2.0.db\TEST.40.cub\Testinternestsales.40.det\2005.36.prt

Colro.Map files are in place

6)Fired this query for updating Color for ProductID 324 (As shown earlier it got records only for 2005 and 2006 in Fact Table)

update testprod

set color=’Test Green’

where productkey=324

7) Process Update

Fired same DMV and if you notice Attribute Index for Color is dropped for 2005 as well as 2008

Scenario B: Update a Product Color (Attribute Key is Color) for a product which doesn’t have any record in fact table (No History or Current Record)

You can see all flex Aggregates

Changed – Color of a Product, which does not have any record in Fact table

After Process Update – all flex aggregates are gone only Index files are left that too for all attributes except Color

Scenario C In earlier mentioned Scenario – Color is Key Column, for testing I have changed the Key Col – ColorID and Name Col. Kept as Color.

Changed Color

update testprod set color=’ASAT’ where productkey=609

So in this case, I am updating a color but internally I am referring ColorID as Key Column for this Color Attribute. Which internally is not impacting Index Mapping and hence not dropping indexes / aggregates

If your design is somewhere near to Scenario 1 or 2, Indexes / Aggregates will get dropped but if you are following the practice given in Scenario 3, It will not drop indexes because it’s not impacting the Key Col (and that’s why it’s a suggested practice to use Name Col for Attributes).

Scenario D: Dropped a record from Dimension and shown an impact on Aggregates. as expected its dropped all Attribute Indexes (Map files) and Flex Aggregates

SELECT * FROM SYSTEMRESTRICTSCHEMA( $SYSTEM.DISCOVER_PARTITION_STAT , CUBE_NAME = ‘Test’, DATABASE_NAME = ‘Adventure Works DW 2008R2’ , MEASURE_GROUP_NAME = ‘Testinternestsales’ , PARTITION_NAME = ‘2005’)

Aggregates are present.

SELECT * FROM SYSTEMRESTRICTSCHEMA($SYSTEM.DISCOVER_PARTITION_DIMENSION_STAT

, CUBE_NAME = ‘TEST’ , DATABASE_NAME = ‘Adventure Works DW 2008R2’ ,MEASURE_GROUP_NAME = ‘Testinternestsales’

,PARTITION_NAME = ‘2005’)

Now fired below mentioned query for checking indexes

SELECT * FROM SYSTEMRESTRICTSCHEMA($SYSTEM.DISCOVER_PARTITION_DIMENSION_STAT

, CUBE_NAME = ‘TEST’ , DATABASE_NAME = ‘Adventure Works DW 2008R2’ ,MEASURE_GROUP_NAME = ‘Testinternestsales’

,PARTITION_NAME = ‘2005’)

ProductKey 611 doesn’t existing in Fact Table

This signify that we don’t have record for this guy

delete from testprod

where productkey=611

Now if you notice all flex aggs are dropped you can see size as 0

Indexes – for all attributes are dropped because we have deleted a record and it had impacted all Attributes

*———–End of Article—————-*

![clip_image001[6] clip_image001[6]](https://karangulati.files.wordpress.com/2012/05/clip_image0016_thumb.png?w=428&h=376)

![clip_image002[6] clip_image002[6]](https://karangulati.files.wordpress.com/2012/05/clip_image0026_thumb.jpg?w=563&h=79)

![clip_image003[6] clip_image003[6]](https://karangulati.files.wordpress.com/2012/05/clip_image0036_thumb.png?w=527&h=314)

![clip_image004[6] clip_image004[6]](https://karangulati.files.wordpress.com/2012/05/clip_image0046_thumb.png?w=563&h=52)

![clip_image005[6] clip_image005[6]](https://karangulati.files.wordpress.com/2012/05/clip_image0056_thumb.png?w=550&h=148)

![clip_image002[11] clip_image002[11]](https://karangulati.files.wordpress.com/2012/05/clip_image00211_thumb.jpg?w=493&h=285)

![clip_image001[10] clip_image001[10]](https://karangulati.files.wordpress.com/2012/05/clip_image00110_thumb.png?w=268&h=284)

![clip_image002[13] clip_image002[13]](https://karangulati.files.wordpress.com/2012/05/clip_image00213_thumb.jpg?w=244&h=94)

![clip_image004[9] clip_image004[9]](https://karangulati.files.wordpress.com/2012/05/clip_image0049_thumb.jpg?w=453&h=137)